Half-Assing a Web Game with ChatGPT and Friends

I’ve been playing around with AI tools to help create software for a while, but the upcoming UK election was the kick-in-the-behind that I needed to try something a bit more serious.

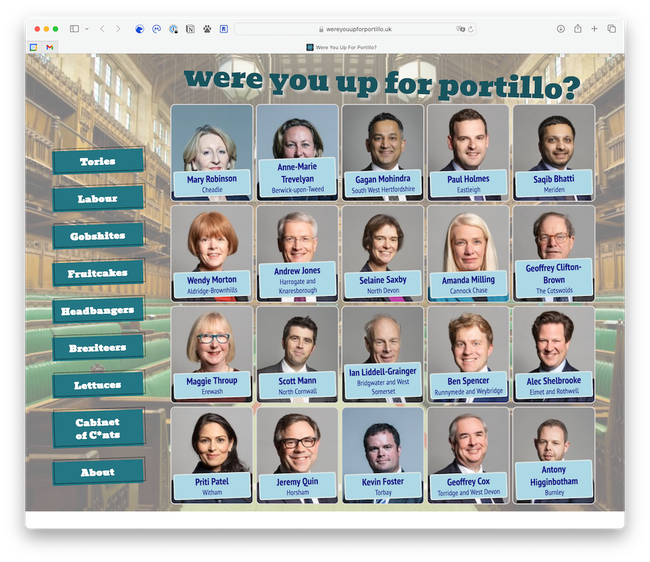

I had an idea for a silly election-night distraction - a sort of bingo-style web game that you could use to track the unfolding catastrophe for the ruling Conservative Party whilst knocking back shots as prominent politicians were cast into political oblivion.

My front-end web development skills aren’t up to date enough to build that in the limited time available, so I figured I’d lean on ChatGPT, Perplexity and Mistral to help me out.

It worked, and so does the site (after a fashion). The experience was a fascinating one.

There are definitely benefits in being able to get LLMs to help out:

- I was way quicker than I would have been, had I only had documentation and tutorials to call on

- I could get going with only a surface-level understanding of what I was trying to achieve, once I’d broken the problem down into its main building blocks

- In the end, I delivered a working solution before I lost patience (this isn’t specifically LLM-related, but there is definitely a mean time to giving up with side projects like this if you can’t get results within a certain timeframe)

But there are also downsides:

- I went down several dead ends because the LLM hallucinated - the sheer confidence with which an LLM delivers an answer makes it very easy to blindly cut and paste, and it’s not always obvious where the model lost the plot

- Because I knew the LLM might be hallucinating, it wasn’t always obvious when it was actually right and the bug was one I’d introduced myself

- Using a single LLM didn’t really work, because of the dead-end problem - the way around this was to have several LLMs on the go simultaneously. As they didn’t hallucinate in the same ways, asking another model the same question was generally the route out of the dead end.

In summary, then:

- I got something built quicker

- The quality is what you’d expect from an enthusiastic amateur - it’s a long way from robust, tested, well-architected production code

That last point is the main problem.

LLMs are the junk food of the software industry. We rely on them because they’re an initially satisfying quick fix, and just like junk food, you’ll suffer long-term consequences if you rely on them too much.

LLM-generated code is the result of feeding different solutions to the same problem through a statistical mincer, and the results are not going to be consistent in the small details that can make the difference between a robust code base and a shoddy one.

Aspects like naming conventions, error handling patterns and a myriad of other seemingly trivial factors might not make the difference between code that works and code that doesn’t, but they WILL make maintaining and extending the code much, much harder.

The bigger problem, though, is with the human in the loop.

If the solution has been glued together from generated code, you probably won’t have the conceptual understanding of its structure that’s needed to reliably understand the solution as a whole.

Code is important to a product, but building it relies on developing and maintaining intrinsic mental models - you have to be able to conceive and visualise abstract structures of data and flows in your mind’s eye.

Database records don’t exist in any meaningful physical sense, for example - to understand the structure of the data you’re working with, you have to be able to visualise abstract structures and “spin” them in different directions as you’re working with them. To a very large extent, that’s THE key skill for developers - technical knowledge will get you so far, but lacking that kind of mental ability is going to be a significant handicap.

That skill is as good a definition of general intelligence as I can think of, so you can see where I’m going here. If general intelligence is needed to build robust software, and LLMs aren’t generally intelligent by any practical measure, you can’t rely on them to write your software for you.

LLMs will speed you up, but in that sense they’re not so different from a better Google search, or from better abstractions over the underlying technology, such as libraries and frameworks.

I don’t expect software engineering to go the way of buggy-whip manufacturing for a while, and hopefully not in the time I’ve got left in the industry. It will change, just as it has constantly for the last 60 or 70 years, but I wouldn’t bet on the future of organisations that are currently rushing to replace their software developers with ChatGPT licences.